Finding The Gateway To Enterprise AI Adoption

Enterprises that are leading the way in AI adoption recognize that the Model Context Protocol (MCP) is the key to unlock AI’s long-promised rewards.

Without MCP, AI agents are marooned on an island, cut off from the tools and data they need to work and act rather than just answer questions.

With MCP, AI agents can get hands-on, carrying out tasks using multiple apps, analyzing data directly, summarizing information, synthesizing data, and coordinating tasks across business systems and data to help your teams achieve new levels of productivity.

However, unlocking these capabilities creates a proportional risk and a novel, complex attack surface that existing security systems cannot protect your organization against.

MCP Manager’s gateway centralizes, moderates, and secures all your organization’s MCP traffic, so that you can reap the benefits of this exciting new technology in a secure, scalable, controlled way.

What Is The Model Context Protocol (MCP)?

MCP is like the lingua franca or universal connector for AI. It’s allows AI agents to communicate and interact with software and “resources” including:

- Custom/In-house enterprise software

- Other internal systems

- Third-party software (such as CRM systems and project management apps)

- Email clients and calendar services

- File servers and databases

MCP brings AI out of its silo, integrating it with the tools and data that your organization uses, whether these are systems provided by an external vendor, such as Jira or Salesforce, or a resource you have built internally.

MCP’s universality also enables AI agents to seamlessly move through different resources without needing unique connectors or APIs for each resource. This allows agents, or teams of agents to tap into all the required tools and data that they need to complete complex workflows with many steps, or to gather insights from a variety of data sources, to produce genuinely valuable returns, for organizations.

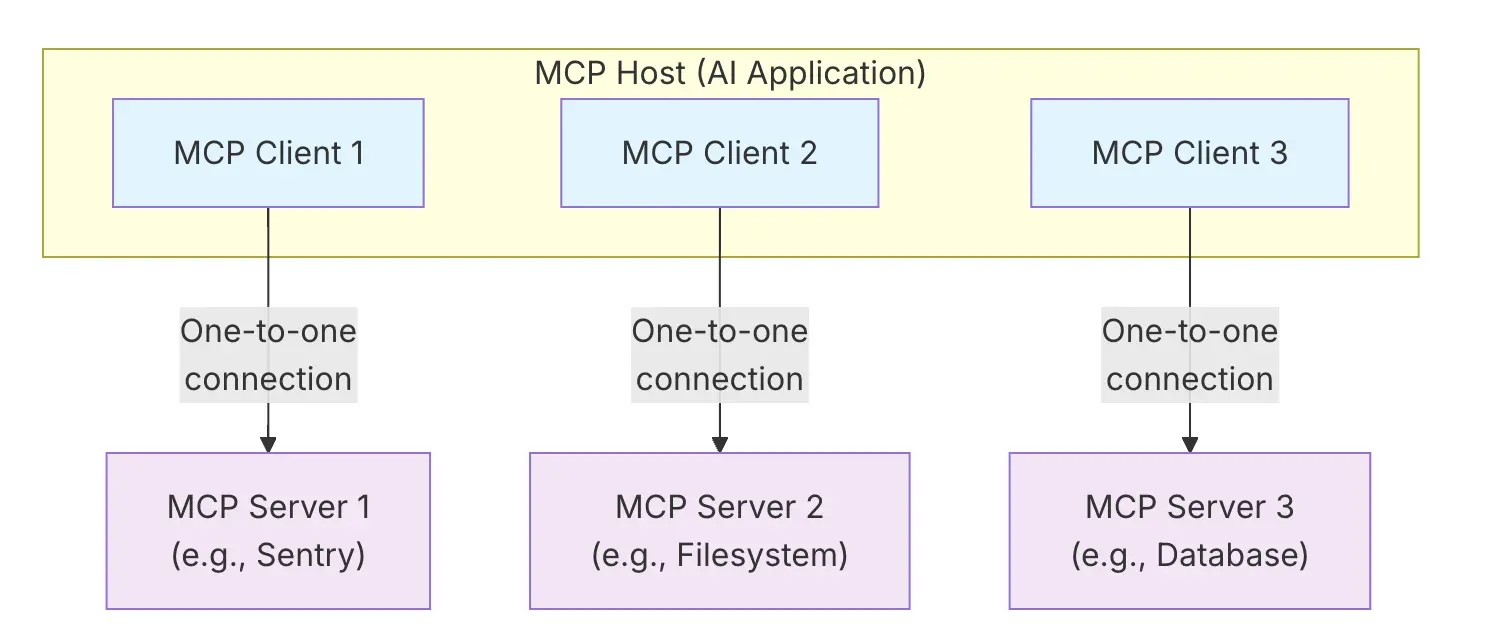

MCP consists of two main components:

MCP Servers

The entry point that allows MCP clients to access and work with an application or dataset. Companies like Atlassian, Asana, and Microsoft already provide MCP servers for LLMs to connect to their apps, while independent developers are also creating their own MCP servers. MCP servers expose capabilities as three abstractions:

- Tools: Invocable actions, such as “send_email” or “create_project”.

- Resources: Static or dynamic data (such as documents or databases) that provide added context for AI agents.

- Prompts: Preconfigured, parametrized templates that provide guidance for tasks to help AI agents use tools and resources more effectively.

MCP Clients

The LLMs you directly interact with, like ChatGPT and Claude. This is where human users ask questions, give instructions, and receive responses from the AI.

Without MCP, your LLM is just a question-and-answer machine using information it’s already consumed. With MCP, your AI agents have real agency. They gain the ability to take real, chained actions using all the tools they needs to be genuinely productive and deliver the promised value of agentic AI.

MCP Servers – An Improved Alternative To APIs

Teams within enterprises are increasingly creating their own MCP servers to expose internal services instead of using traditional APIs.

The initial motivator for this was teams figuring out how to ready their internal services for agentic AI. However, they quickly realized that MCP servers have a number of benefits compared to using APIs, including:

Universal, Transferable Communication Method

The work to expose a service via an MCP server is a one-off task, which is far more efficient than creating an API wrapper for each connecting/consuming application. Using MCP servers instead of traditional APIs greatly is much less burdensome for internal engineering teams.

Simplified and Robust

Connections via traditional APIs are tight, precise, and distinct to each connecting application, like a complex tangle of cables that you need to connect to the precise sockets you need. As you can imagine this creates many failure points, and requires ongoing maintenance and updates for each connecting application’s API. In MCP the connection surface for connecting clients is simplified, and standardized, into a single integration pattern.

Session-Based Communication

Traditional APIs provide stateless, single request-response transactions. Multi-step operations require back and forth interactions where all state and context is managed by the connecting client.

In short, APIs have no memory or context. In contrast, MCP servers maintain session-state and context, enabling real-time collaboration between AI agents and your internal/external systems. this enables far more complex operations, using multiple tools, and taking dynamic paths – rather than relying only on pre-defined workflows.

Dynamic Architecture

Traditional APIs expose predefined endpoints with precise functionality. MCP servers expose dynamic tool discovery, enabling clients and agents to peruse and select the best tool -or combination of tools – for the job. This opens up an exponentially larger set of capabilities compared to hard-coded, traditional APIs.

Flexible, Adaptable, Scalable

Updates to traditional APIs can require risky and slow system-wide changes. Instead, using MCP servers teams can make service specific updates without rewriting code en-masse, which makes those updates faster and reduces system-wide outages.

An AI-Ready Connection Method

Finally, it should be reiterated that MCP servers are designed to enable AI agents to connect and collaborate dynamically with resources like internal services, apps, and databases. MCP servers free teams up from the requirement to create custom API connections for each service and service consumer, which would’ve drastically reduced the adoption speed and return on investment of enterprise agentic AI.

With Great Power Comes Great…Risks

Giving AI agents the power to access your systems and data, in both remote and local deployments, unlocks exponential improvements in productivity, but this power can also be turned against your organization.

While AI agents can execute much faster than people, they still lack the most basic levels of judgment that we would expect from the freshest-faced junior employees.

AI agents can’t easily discern between malicious and innocent instructions based on their consequences, making them exceptionally vulnerable to manipulation by hackers. Agents are also prone to misinterpreting genuine, innocent instructions with dire consequences, such as deleting or changing system files, sharing sensitive data, or wiping customer data.

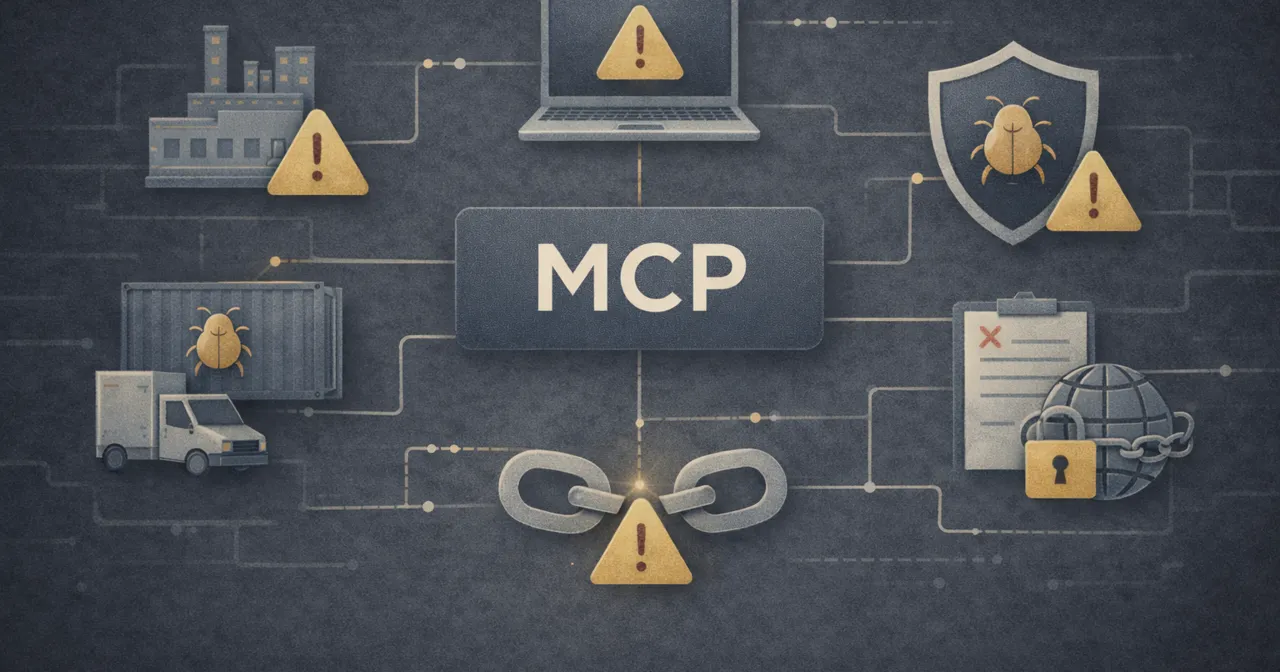

MCP puts all our company systems and data in the hands of these unscrupulous and powerful agents, which creates a new range of novel, high-risk security concerns, including:

Prompt Injection & Tool Poisoning

Attackers are hiding malicious commands in the metadata of MCP tools and even in their outputs, such as error messages. Various test cases have demonstrated the effectiveness of these attack methods, the variety of locations to hide malicious commands, and how easily attackers can insert malicious commands into previously considered “safe” servers (known as a MCP “rug pull” attack).

Retrieval Agent Deception (RADE)

RADE is another form of prompt injection, but it works by hiding malicious commands within documents, webpages, databases, and other sources that the agent retrieves when working on a task.

Configuration Mistakes

Researchers have identified configuration flaws in MCP servers from major SaaS vendors that could lead to data leaks or unauthorized access. For example, Asana had to take their own MCP server offline after discovering that a misconfiguration of tenancy isolation meant users could access other organizations’ data and projects.

Cross-Server Shadowing

A malicious MCP server includes instructions that modify how the LLM interacts with another MCP server or tool. For instance, in one real-world example, attackers deployed a malicious MCP server to trick an AI agent into forwarding them someone else’s private WhatsApp messages whenever that agent accessed the WhatsApp MCP server’s “send_message” function.

Token Theft and Account Takeover

The management of access tokens and credentials is patchy and immature in MCP systems, creating numerous opportunities for attackers to intercept, steal, and exploit these tokens and credentials to access your business systems and data. Once inside, attackers can exfiltrate sensitive data, hold data hostage, and execute malicious commands at will.

The Solution: A Single Gateway for All Your MCP Servers

MCP gateways, such as MCP Manager, centralize and control the provision of and access to MCP servers. MCP gateways sit between MCP clients and servers, monitoring, mediating, and securing their communications and interactions.

Using an MCP gateway enables you to:

- Centralize and organize access to all your organization’s MCP servers

- Neatly package, secure, and provision internally-built MCP servers

- Enforce fine-grained role-based access controls

- Implement enterprise identity management (including OAuth)

- Add runtime guardrails against dangerous behavior

- Enforce comprehensive security and compliance policies

- Block harmful prompts before they reach your LLM

- Mask or anonymize sensitive data

- Generate fully traceable end-to-end logs

- Optimize MCP tool usage to improve performance and reduce LLM fees

All of these capabilities enable you to capitalize on the benefits of AI and MCP servers in a secure, controlled, and scalable manner.

Using a gateway doesn’t slow down your AI adoption either; it facilitates it. A gateway prevents catastrophic security failures that could bring your AI rollout to a halt, reassures concerned stakeholders, and puts MCP servers in packaging that is accessible for non-technical users.

A gateway also transforms a mess of MCP servers strung out across your organization into a single organized hub, where administrator users can oversee, manage, and control all MCP servers and access to them.

In enterprise-level deployments with hundreds of MCP servers, LLMs often struggle to select the right tool for their task. They can get stuck in a loop, make poor decisions, and spend valuable, limited tokens assessing which tool is right for them.

MCP gateways improve your LLM’s effectiveness and efficiency by helping it to select the right tool for its task, handling session-level context, and consolidating server responses to reduce the burden on the LLM’s limited context window, giving it more free space to “think” and work.

Why Choose MCP Manager As Your MCP Gateway

MCP Manager started tackling AI & MCP security issues early. Our team has been working on integration technologies for over nine years, and we understand how to handle the complex challenges that MCP presents in controlling access, automating runtime interventions, system-to-system communication, and end-to-end observability.

Unlike other solutions, MCP Manager does not offer a partial or patched solution to the security and scalability concerns associated with using AI and MCP servers. MCP Manager is a comprehensive solution for controlling, securing, and optimizing the organizational adoption of AI, enabling its use without compromising security.

Get Started Today

The good news is that you don’t need to pull the brake lever on your AI and MCP deployment because of security risks. You can start using MCP Manager now to control and secure your organization’s AI and MCP rollout.

To get started, book an introductory call here. We will show you how MCP Manager works and answer any questions you may have.